I have been managing high-performance computing clusters since the beginning of my career. One of my first tasks was to migrate a group of users doing radar simulations off an old SGI cluster to an Apple Xserve cluster (and more recently to COTS Supermicro servers). So when an acoustic simulations group approached me to modernize their system, I was able to revolutionize their workflow.

I first spent some time learning the applications and processes they were using, making incremental improvements along the way by automating their data extraction and visualization tools in Python. When I transitioned them to RHEL Linux systems, they saw a 5x improvement in their simulation times overnight. Then I procured test bed servers, an InfiniBand switch, 3D NAND storage, GPUs, etc. to experiment with the technology and run a parameter study to find out what would give them the most bang for the buck for their specific applications. Within a couple of months, I had optimized, spec'd out, and obtained quotes for the hardware to deploy a modest $600k HPC system.

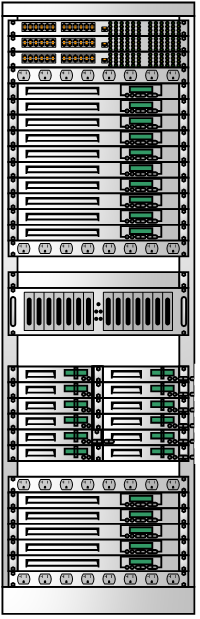

Once we racked, stacked, and wired the hardware, I used OpenHPC and other standard open source tools to configure the system and its diskless nodes. Mach1 (appropriately named after the speed of sound) was born. Its initial setup was >750 cores, >25 TB RAM, V100 GPUs, and 100 Gb/s InfiniBand, all managed by the Slurm scheduler. The system was so successful that many groups came to request processing time, so I worked with each group to set up a multitenant environment with usage statistics and cost sharing.
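
As a rough illustration of the cost-sharing idea (not the actual accounting we ran), here is a minimal Python sketch that splits a shared cost across groups in proportion to their usage. It assumes usage is measured in core-hours per group, for example as totaled from the scheduler's accounting data; the function name `split_cost`, the group names, and all of the numbers are hypothetical.

```python
def split_cost(core_hours, total_cost):
    """Split total_cost across groups in proportion to their core-hours.

    core_hours: dict mapping a group name to the core-hours it consumed
                (hypothetical input, e.g. totaled from scheduler accounting).
    total_cost: the shared cost to divide for the billing period.
    """
    total_hours = sum(core_hours.values())
    if total_hours <= 0:
        raise ValueError("no usage recorded, nothing to split")
    # Each group's share is its fraction of the total usage.
    return {
        group: total_cost * hours / total_hours
        for group, hours in core_hours.items()
    }


# Hypothetical usage figures for one billing period.
usage = {"group_a": 6000.0, "group_b": 3000.0, "group_c": 1000.0}
shares = split_cost(usage, 10000.0)
for group, share in shares.items():
    print(f"{group}: ${share:,.2f}")
```

A proportional split is only one possible policy. A real setup might also weight GPU hours differently from CPU hours or charge a flat base fee per group.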

Seven years later, the system has worked out so well that the group is coming back to me to stand up Mach2 for them.